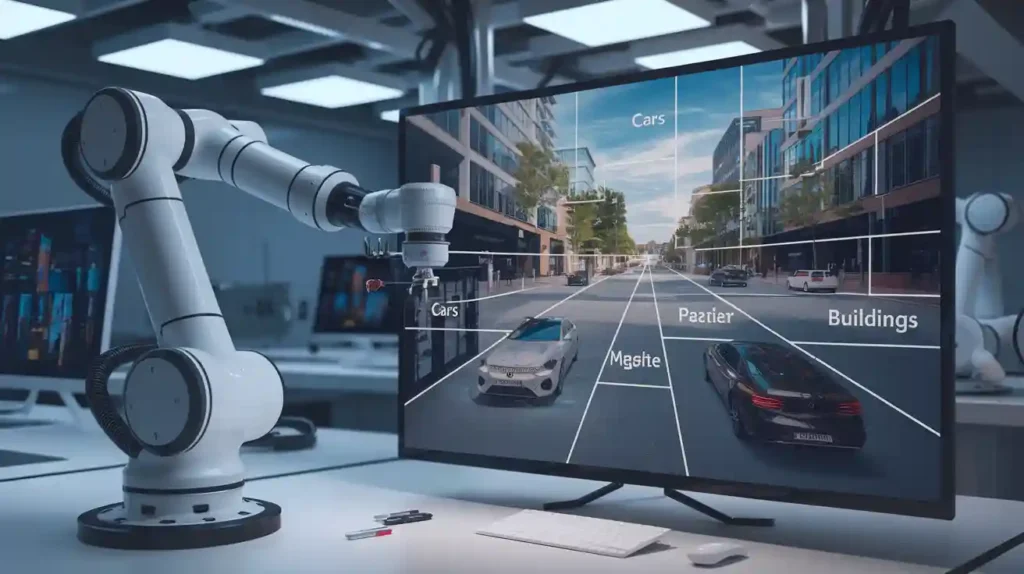

Imagine walking through a bustling city street. Your eyes instantly recognise buildings, cars, people, and trees — not as scattered pixels, but as distinct entities woven into a coherent scene. Semantic segmentation brings this same ability to machines. It’s not just about identifying objects; it’s about understanding their exact boundaries, textures, and relationships — pixel by pixel. In the world of artificial intelligence, this is how machines learn to see rather than merely look.

The Brushstrokes Behind Machine Vision

Think of semantic segmentation as an artist painting a digital masterpiece. Instead of using brushes, it uses algorithms that colour each pixel based on what it represents — sky, road, vehicle, or human. It’s like assigning every speck of colour a purpose. Unlike traditional object detection, which draws boxes around items, semantic segmentation dives deeper, capturing the nuanced boundaries between them.
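To make the "colouring each pixel" idea concrete, here is a minimal sketch in Python with NumPy. It assumes a model has already produced one score per class for every pixel; the class list, the palette, and the function names are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical class list and colour palette, chosen only for illustration.
CLASSES = ["sky", "road", "vehicle", "human"]
PALETTE = np.array(
    [
        [135, 206, 235],  # sky
        [128, 128, 128],  # road
        [255, 0, 0],      # vehicle
        [255, 255, 0],    # human
    ],
    dtype=np.uint8,
)


def scores_to_mask(scores: np.ndarray) -> np.ndarray:
    """Pick the highest-scoring class for every pixel.

    scores has shape (num_classes, height, width);
    the returned mask has shape (height, width).
    """
    return np.argmax(scores, axis=0)


def colourise(mask: np.ndarray) -> np.ndarray:
    """Map each class index to its RGB colour, giving an (H, W, 3) image."""
    return PALETTE[mask]


# Random scores stand in for the output of a trained model.
rng = np.random.default_rng(0)
scores = rng.random((len(CLASSES), 4, 6))
mask = scores_to_mask(scores)
rgb = colourise(mask)
```

Here `mask` holds one class index per pixel, and `rgb` is the painted version that a person would look at.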

In real-world scenarios, this technique becomes the backbone of systems that require precise environmental awareness — from autonomous vehicles navigating crowded streets to medical imaging models identifying cancerous cells. To build such capabilities, professionals often start by mastering image processing fundamentals in a structured learning environment, such as a Data Science course in Pune, where deep learning and computer vision form the canvas for innovation.

The Architecture of Understanding

Behind the elegance of pixel-level classification lies a network architecture crafted with precision. Convolutional Neural Networks (CNNs) form the base, extracting features like edges, textures, and shapes from raw images. However, CNNs built for image classification progressively shrink the spatial resolution and end in a single label for the whole image, so they say what is present but not where. That’s where fully convolutional networks (FCNs) and encoder-decoder models step in.

Encoders compress the image, distilling essential information into abstract representations. Decoders then rebuild this compressed data into a detailed segmentation map, ensuring each pixel finds its rightful place in the visual hierarchy. Architectures like U-Net and DeepLab have revolutionised this process, offering a balance between detail preservation and computational efficiency.
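The compress-then-rebuild idea can be sketched at the level of array shapes. In this illustration, average pooling stands in for the encoder and nearest-neighbour upsampling stands in for the decoder; real networks use learned convolutions instead, and the function names here are hypothetical.

```python
import numpy as np


def encode(image: np.ndarray, factor: int = 2) -> np.ndarray:
    """Downsample an (H, W) array by averaging each factor x factor block."""
    h, w = image.shape
    if h % factor or w % factor:
        raise ValueError("height and width must be divisible by factor")
    blocks = image.reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


def decode(features: np.ndarray, factor: int = 2) -> np.ndarray:
    """Upsample by repeating each value along both axes (nearest neighbour)."""
    return features.repeat(factor, axis=0).repeat(factor, axis=1)


image = np.arange(16, dtype=float).reshape(4, 4)
compressed = encode(image)     # shape (2, 2)
restored = decode(compressed)  # shape (4, 4), but fine detail is lost
```

The restored array has the original size but not the original detail. Losing detail in this way is the problem that U-Net's skip connections are designed to reduce, by passing higher-resolution encoder features directly to the decoder.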

These models work much like translators — converting the language of raw pixels into semantic meaning. For instance, when a drone scans farmland, the algorithm doesn’t just see colours; it distinguishes healthy crops from diseased ones, allowing for targeted interventions. Such advancements owe much to interdisciplinary knowledge gained through structured education, such as a Data Science course in Pune, where students learn to blend theory with experimentation.

Training the Model: From Chaos to Clarity

Every great artist trains their eye to perceive patterns, and so do machines. Training a semantic segmentation model involves feeding it thousands of labelled images in which every pixel is tagged with the correct category. Producing those labels, a painstaking process known as data annotation, is vital: it’s the bridge between randomness and recognition.

However, this journey isn’t without hurdles. The model must learn to tell apart subtle cases, like shadows versus surfaces or reflections versus objects. Class imbalance, occlusion, and variations in lighting can easily confuse the algorithm. Techniques such as data augmentation, transfer learning, and class-weight balancing help overcome these challenges. It’s a process of continual refinement, not unlike sculpting, where each pass of the chisel brings the form into sharper focus.
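Two of these techniques are easy to illustrate with NumPy. The sketch below assumes integer label masks and per-pixel class probabilities; the inverse-frequency weighting formula and all function names are illustrative choices, not a prescribed recipe.

```python
import numpy as np


def inverse_frequency_weights(mask: np.ndarray, num_classes: int) -> np.ndarray:
    """Give rarer classes larger weights; classes absent from the mask get 0."""
    counts = np.bincount(mask.ravel(), minlength=num_classes).astype(float)
    present = counts > 0
    weights = np.zeros(num_classes)
    weights[present] = counts.sum() / (present.sum() * counts[present])
    return weights


def weighted_cross_entropy(probs: np.ndarray, mask: np.ndarray,
                           weights: np.ndarray) -> float:
    """Weighted mean of -log p(true class) over all pixels.

    probs has shape (num_classes, H, W); mask has shape (H, W).
    """
    rows, cols = np.indices(mask.shape)
    p_true = probs[mask, rows, cols]   # probability of the correct class
    pixel_weights = weights[mask]      # weight of each pixel's true class
    losses = -np.log(np.clip(p_true, 1e-12, 1.0))
    return float((losses * pixel_weights).sum() / pixel_weights.sum())


def flip_pair(image: np.ndarray, mask: np.ndarray):
    """Augmentation: mirror image and mask together so labels stay aligned."""
    return image[:, ::-1], mask[:, ::-1]


# A 4x4 mask where class 1 covers only 2 of 16 pixels.
mask = np.zeros((4, 4), dtype=int)
mask[0, :2] = 1
weights = inverse_frequency_weights(mask, num_classes=2)

probs = np.full((2, 4, 4), 0.5)  # an undecided model: 50/50 everywhere
loss = weighted_cross_entropy(probs, mask, weights)

image = np.arange(16).reshape(4, 4)
flipped_image, flipped_mask = flip_pair(image, mask)
```

The key point for augmentation is that any geometric change applied to the image must be applied identically to its mask; otherwise the labels no longer line up with the pixels they describe.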

Real-World Applications: From Roads to Operating Rooms

Semantic segmentation’s impact stretches across industries. In autonomous driving, it allows vehicles to distinguish between pedestrians, lanes, and obstacles in real time, supporting safer decisions in unpredictable environments. In healthcare, it transforms diagnostics by enabling machines to highlight tumours, measure organ volumes, and assist surgeons with fine-grained precision.

Agriculture benefits through satellite imagery analysis that maps soil health, predicts yields, and detects pests. Urban planners use it to monitor construction progress, deforestation, and flood-prone areas. Even in entertainment, it powers visual effects pipelines, replacing backgrounds and adjusting lighting with pixel-perfect accuracy.

Each of these applications illustrates how far we’ve come from mere image recognition to full-scene understanding — a journey driven by the evolving synergy between data, algorithms, and human creativity.

The Future of Seeing: Context is the New Clarity

The next frontier for semantic segmentation lies in contextual and instance-level understanding. While current models classify pixels, they often miss the context — the “why” behind what’s being seen. Integrating semantic segmentation with scene graph modelling and attention mechanisms can unlock deeper reasoning capabilities.

Edge computing and real-time deployment will also play key roles, allowing devices like drones and robots to process segmentation maps on the fly. Additionally, combining semantic and instance segmentation into panoptic segmentation creates a unified view in which every pixel carries both a class label and, for countable objects, the identity of the individual instance it belongs to.
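One simple way to picture a panoptic map is as a single integer per pixel that packs a class and an instance together. The sketch below assumes the encoding `class_id * 1000 + instance_id`; the divisor, the class numbering, and the function names are assumptions made for illustration.

```python
import numpy as np

# Assumed encoding: panoptic_id = class_id * LABEL_DIVISOR + instance_id.
LABEL_DIVISOR = 1000


def to_panoptic(semantic: np.ndarray, instance: np.ndarray) -> np.ndarray:
    """Merge a class map and an instance map into one panoptic map."""
    return semantic * LABEL_DIVISOR + instance


def from_panoptic(panoptic: np.ndarray):
    """Split a panoptic map back into (class map, instance map)."""
    return panoptic // LABEL_DIVISOR, panoptic % LABEL_DIVISOR


# Hypothetical classes: 0 = sky, 1 = road, 2 = vehicle.
semantic = np.array([[0, 0, 2, 2],
                     [1, 1, 2, 2]])
# Instance 0 means "no individual instance" (sky, road);
# the two vehicle columns belong to two different vehicles.
instance = np.array([[0, 0, 1, 2],
                     [0, 0, 1, 2]])

panoptic = to_panoptic(semantic, instance)
# panoptic is [[0, 0, 2001, 2002], [1000, 1000, 2001, 2002]]
```

The semantic map alone cannot tell the two vehicles apart, since both are class 2. The panoptic values 2001 and 2002 keep the shared class while separating the individual objects.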

As we move forward, the boundaries between vision and comprehension blur. Machines will not only identify objects but interpret scenes, emotions, and even intent — taking us closer to an era where artificial vision mirrors human perception.

Conclusion: Painting the Future Pixel by Pixel

Semantic segmentation is more than a technical process; it’s an artistic and cognitive leap. By teaching machines to recognise every pixel’s role in the bigger picture, we inch closer to bridging the gap between perception and understanding. Whether guiding self-driving cars or aiding doctors in diagnosis, it represents the evolution of computer vision from seeing to comprehending.

In many ways, this field reminds us that intelligence, whether human or artificial, begins with observation, but true understanding lies in interpretation. And for those ready to explore this frontier, mastering the science behind these digital brushstrokes starts with a solid foundation in deep learning and computer vision, transforming curiosity into creation, one pixel at a time.